Did MIT hold back AI for decades?

The fight between two approaches, Symbolic AI and Connectionism, has shaped the field of AI since the 1950s. Is it possible that Symbolic AI held AI back by decades?

Symbolic AI vs Connectionism

Symbolic AI and Connectionism are two contrasting approaches to artificial intelligence (AI), both aiming to recreate human intelligence through distinct methods.

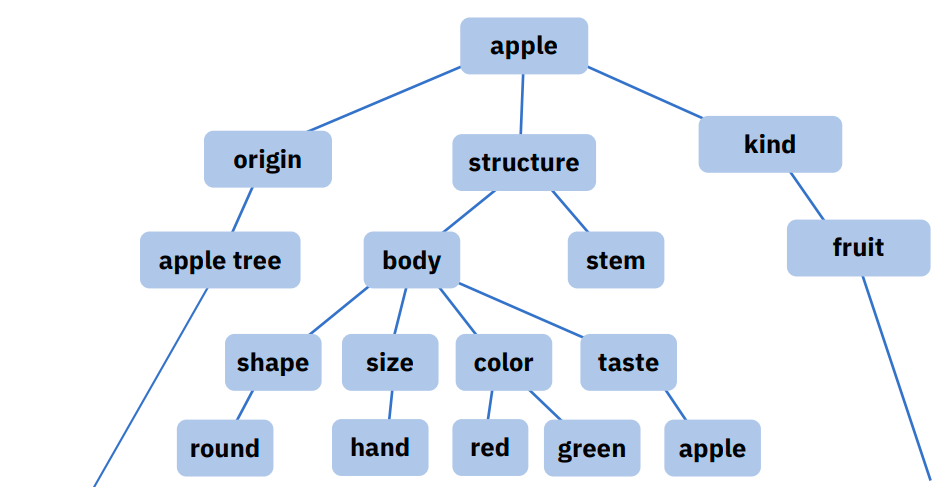

Symbolic AI, also known as "Good Old-Fashioned AI" (GOFAI), focuses on the development of rule-based systems that manipulate symbols and apply logical reasoning to solve problems. These systems are designed to imitate human thought processes using symbolic representations and formal logic, which proponents of the approach believe underlie human cognition.
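
To make the "rule-based" idea concrete, here is a minimal Python sketch of forward chaining, one classic way symbolic systems reason: facts are symbols, rules are hand-written if-then statements, and the program derives new facts by applying rules until nothing changes. The facts and rules (`has_feathers`, `is_bird`, and so on) are hypothetical examples, not taken from any real GOFAI system.

```python
# A toy forward-chaining inference engine (illustrative sketch).
# Knowledge is hand-written as rules; new facts are derived purely
# by symbolic manipulation. The rules below are hypothetical examples.
from typing import FrozenSet, List, Set, Tuple

# Each rule: if all premises are known facts, conclude the consequent.
Rule = Tuple[FrozenSet[str], str]

RULES: List[Rule] = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "can_fly"}), "can_migrate"),
    (frozenset({"is_penguin"}), "is_bird"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire the rule only if every premise is already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    derived = forward_chain({"has_feathers", "can_fly"}, RULES)
    print(sorted(derived))  # ['can_fly', 'can_migrate', 'has_feathers', 'is_bird']
```

Everything this system "knows" had to be typed in by a programmer; a question that falls outside its rules simply produces nothing new.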

On the other hand, connectionism is an approach inspired by the neural networks found in biological systems, specifically the human brain. Connectionist models, often referred to as artificial neural networks (ANNs), comprise interconnected nodes or neurons that process and transmit information. These networks learn to recognize patterns and make decisions by adjusting the strength of connections between nodes based on input data. This learning process is achieved through training, where the network is exposed to a large number of examples, allowing it to gradually improve its performance.
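
This learning process can be illustrated with the simplest artificial neuron, a perceptron trained with the classic perceptron learning rule. The following is a minimal Python sketch under illustrative assumptions: the task (logical OR), the learning rate `lr`, and the number of `epochs` are arbitrary choices. Nobody writes down the rule "output 1 if either input is 1"; the neuron arrives at it by adjusting its connection weights whenever it gets an example wrong.

```python
# A single artificial neuron (perceptron) that learns logical OR from examples.
# Task, learning rate, and epoch count are illustrative assumptions.
from typing import List, Tuple

Example = Tuple[Tuple[float, float], int]

# Training data: inputs and the desired output for logical OR.
DATA: List[Example] = [
    ((0.0, 0.0), 0),
    ((0.0, 1.0), 1),
    ((1.0, 0.0), 1),
    ((1.0, 1.0), 1),
]


def predict(weights: List[float], bias: float, x: Tuple[float, float]) -> int:
    """Fire (output 1) if the weighted sum of the inputs exceeds zero."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train(data: List[Example], epochs: int = 20, lr: float = 0.1) -> Tuple[List[float], float]:
    """Perceptron learning rule: nudge connection strengths toward the correct answer."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


if __name__ == "__main__":
    w, b = train(DATA)
    print([predict(w, b, x) for x, _ in DATA])  # [0, 1, 1, 1]
```

A single neuron like this can only learn patterns that a straight line can separate; deep networks remove that limit by stacking many such units and training them with backpropagation.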

Symbolic AI can be compared to building a LEGO castle using an instruction manual, where each block has a specific meaning and place. Connectionist AI, by contrast, is more like growing a plant: it learns and adapts over time without specific instructions.

The Symbolic AI crowd believes that if a smart enough programmer wrote down all the instructions for how to think, we would have a formal model of what thinking is. In contrast, the connectionist contingent thinks that there is no way around letting the system learn from data. In this approach, the intelligence doesn't come from the programmer, but from the information provided in the environment.

The ongoing debate between symbolic AI and connectionism has significantly influenced AI research over the years. This intellectual tug-of-war has led to the rise and fall of various methodologies, ultimately shaping the direction and progress of AI development. While symbolic AI emphasizes structured knowledge and rule-based systems, connectionism highlights the importance of learning through experience and adapting to new information. The interplay between these two approaches continues to impact the evolution of AI as researchers strive to develop more advanced and efficient models of intelligence.

The Key Players

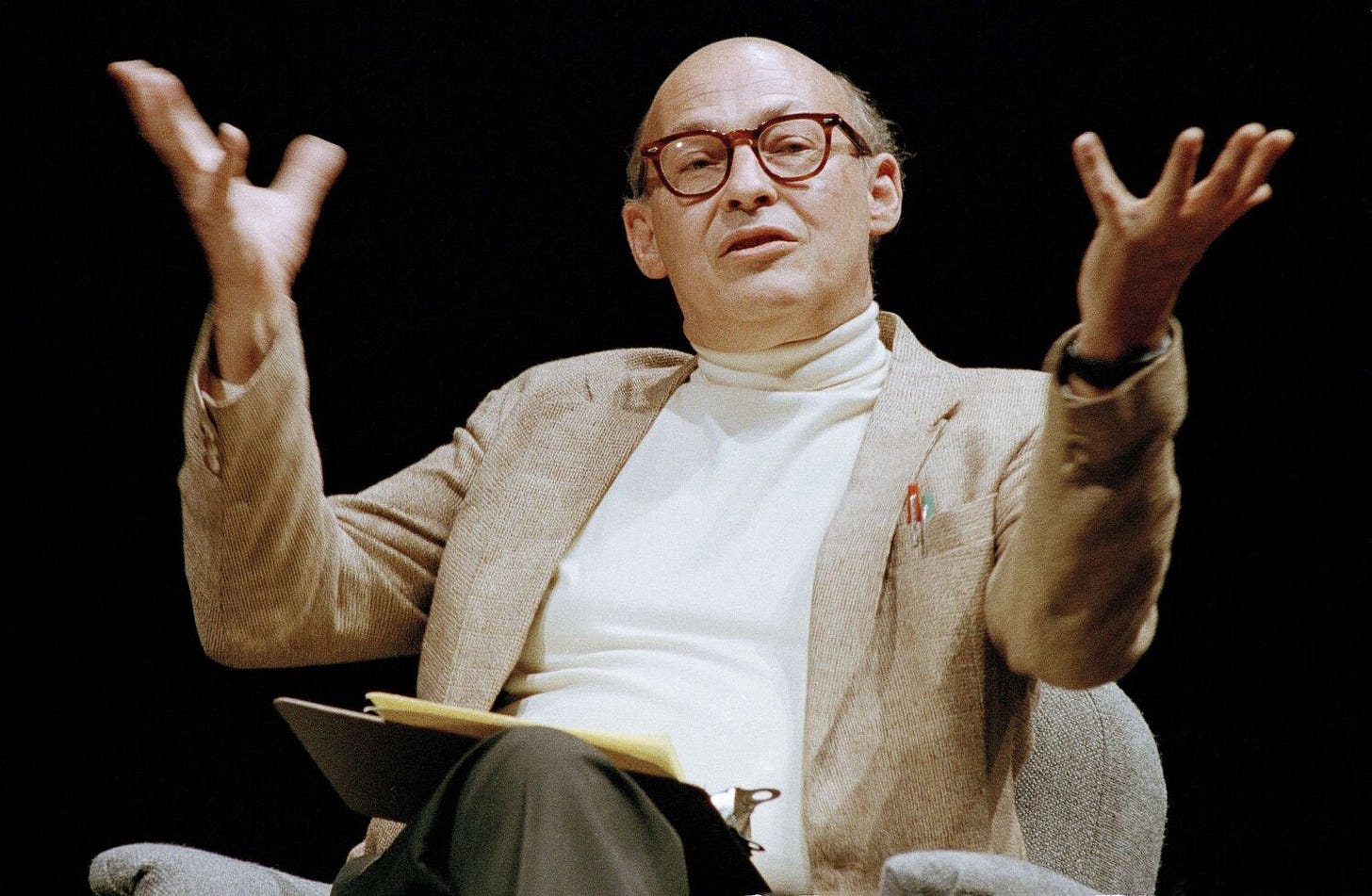

Heinz von Foerster

Heinz von Foerster, an Austrian-American physicist, and Marvin Minsky, an American cognitive scientist, were key players in this AI tug-of-war. Von Foerster rose to prominence after attending the Macy Conferences on Cybernetics, a series of highly influential interdisciplinary meetings held in New York between 1946 and 1953 to explore the potential of cybernetics. Cybernetics studies control and communication, especially feedback, in biological, social, and technological systems, and seeks general patterns and structures that apply across all of them in order to improve their efficiency, adaptability, and overall performance.

Among the influential attendees were thinkers such as John von Neumann, whose work spanned fields from physics to computing and economics. Most modern computers still follow the stored-program design known as the "von Neumann architecture". Von Foerster, by contrast, was an upstart from a physics background who got into machine intelligence, and rose to prominence, largely because he had stumbled into the Macy Conferences almost by accident.

Von Foerster later founded the Biological Computer Lab (BCL) at the University of Illinois in 1958. The BCL focused on self-organization (a process whereby a system spontaneously forms an orderly structure without external guidance), learning, and artificial neural networks, drawing inspiration from biological systems.

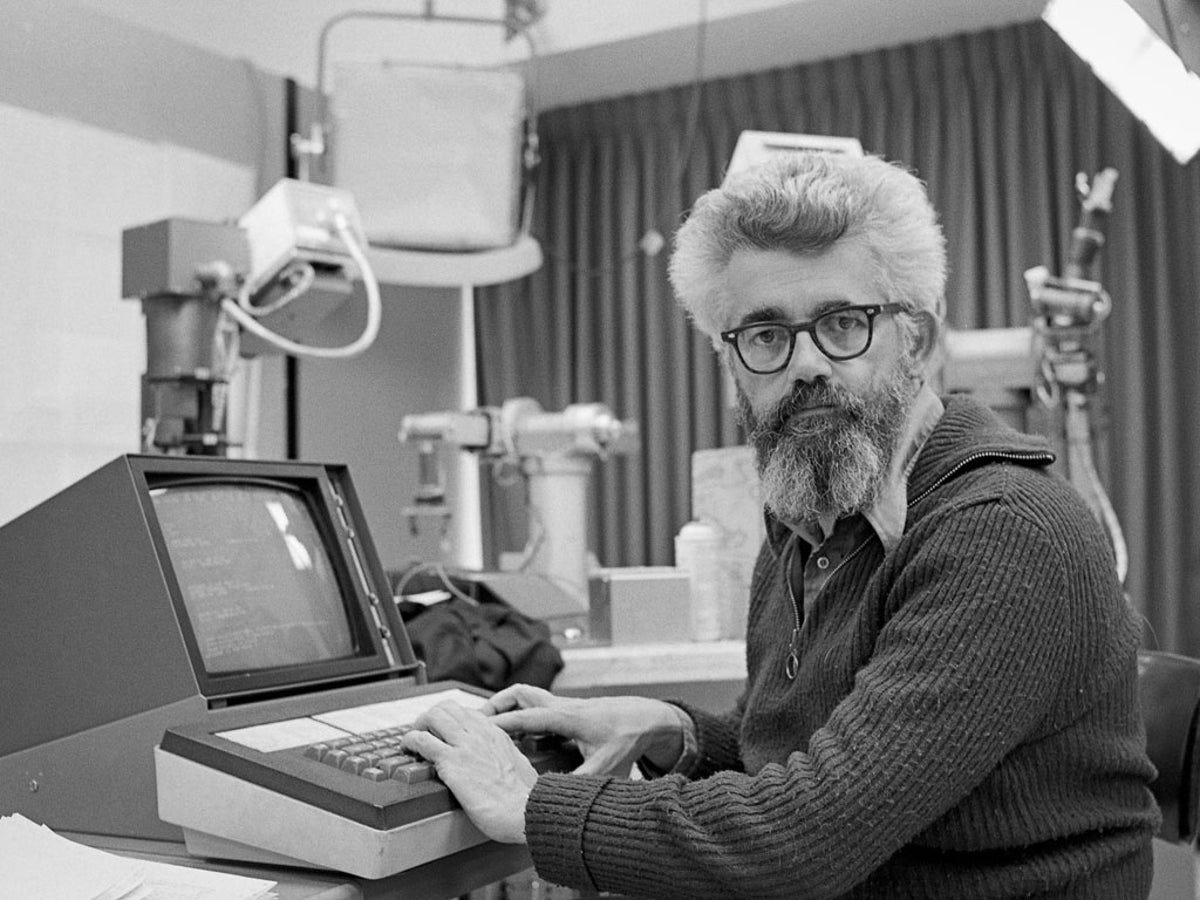

Marvin Minsky & John McCarthy

Marvin Minsky, on the other hand, began his journey as a teenager by building mechanical contraptions focused on computation. After showcasing his early aptitude for engineering and robotics, he studied at Harvard and Princeton, and later co-founded the MIT Artificial Intelligence Laboratory with John McCarthy in 1959. The MIT AI Lab championed the symbolic AI approach, which aimed to understand and replicate human intelligence by breaking down complex tasks into simpler, rule-based subtasks. This method of AI research involved creating algorithms and representations that could simulate cognitive processes. Minsky and his colleagues believed that by emulating these processes, they could eventually develop machines capable of human-like problem-solving and reasoning.

Where von Foerster spoke in the obscure language of the cyberneticians and called his machine learning research "second-order cybernetics", Minsky and McCarthy had a knack for popularizing their field and promising fast advances. By branding their research "Artificial Intelligence" (a term McCarthy coined), they inspired the public with a future that seemed very close.

The Clash

In the 1960s, the philosophical clash between the BCL and the MIT AI Lab deepened. As symbolic AI gained popularity and funding by promising fast results, the BCL's connectionist approach struggled to secure resources for research. The connectionists were more focused on foundational research and didn't promise immediate results. In a constrained funding environment, this was a death sentence. The BCL was disbanded in 1974, marking the end of an era in AI research. Reasons for its closure included budget cuts, changes in the academic environment, and the growing dominance of symbolic AI. The demise of the BCL signified a temporary setback for alternative AI approaches.

With the closure of the BCL and the dominance of symbolic AI, resources for alternative approaches like neural networks were scarce. AI research became heavily focused on rule-based systems, limiting the development of techniques that would later give rise to large language models (LLMs) like ChatGPT. Despite the constraints, a few dedicated researchers continued to explore the potential of neural networks.

Impact on Modern AI

During the period of symbolic AI's dominance, researchers such as Geoffrey Hinton, Yann LeCun, and Yoshua Bengio persisted in their exploration of neural networks. These pioneers faced numerous challenges due to limited resources and support, but their dedication to connectionism eventually paid off. In the late 1980s and early 1990s, neural networks regained popularity as learning techniques such as backpropagation were developed and popularized. As computational power and data became more plentiful, researchers could build ever more sophisticated models, leading to breakthroughs in deep learning and natural language processing and paving the way for powerful large language models like ChatGPT that have transformed the AI landscape.

One of the key reasons neural networks, and connectionist approaches in general, win so decisively against symbolic approaches is that the limiting factor for intelligence isn't how fast your computer is, but how much information you have available. Achim Hoffman explored this in his seminal 1990s paper "General Limitations on Machine Learning". Writing an intelligent system by hand would require us to enter all of the relevant information ahead of time. But intelligence is mostly a matter of learning from data, which means building a system that can find patterns in data. So far, all computational learning systems have been severely constrained by the data available to them.

Biologists Humberto Maturana and Francisco Varela explored the idea that life itself is a cognitive process in their 1980s book "Autopoiesis and Cognition". They argue that all living systems are fundamentally cognitive because they self-organize in order to create and maintain their own structure. This self-organizing nature allows organisms to process information, adapt, and respond to changes in their environment, whether or not they possess a nervous system.

Neo-Yuddism

With all the successes of GPT-4 and the connectionist approach, you'd think the debate is settled. But the Symbolic AI crowd around people like Eliezer Yudkowsky is frightened by the dynamic nature of intelligence. They were hoping that very smart humans could write the AI in a formal, structured way. But the AI we actually know how to build simply learns from the information available in the world.

With a public debate about AI safety looming, the irony at the heart of the issue is that the Symbolic AI crowd, who invented the marketing term "Artificial Intelligence" in the 1950s to concentrate funding and starve their connectionist competition, is now using the same term to frighten the public about something that's very far from "Artificial Intelligence".

So far, what LLMs have done is compress large sets of information very effectively and find the patterns within them. What they are doing is less like "figuring out" and more like "already knowing".

GPT-4 has consumed the world's information, but it's not actually good at reasoning about it. The progress that has been made is exciting, and the applications are truly inspiring. But just as the AI crowd of the 1970s got excited by how close they thought they were to AI, they are now scared by how close they think AI is, when in fact it is still far away.

The reality is more complicated: we've made a ton of progress in compressing existing information into queryable models, but very little progress in increasing the amount of information available in a self-organizing way to truly move forward. Beyond a certain point, acquiring more information requires interacting with the world: running experiments. And running experiments about the nature of reality requires resources, time, and patience. Because while computation is fast, the world is slow. As LLMs get more data they will learn, but the speed of that learning will be limited by how much data they can ingest, and the world simply does not hold enough data for them to progress much beyond where they are now.

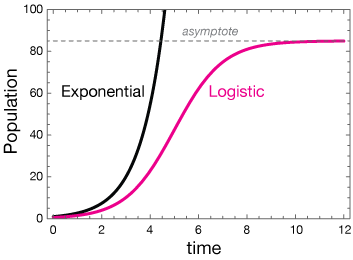

While AI companies are still improving their techniques, they can squeeze additional information out of the existing data, and through data-sharing deals they can acquire more, though not in substantial amounts. Once the incremental improvements and the data run dry, progress might look more like a logistic curve than an exponential one.
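
The difference between the two curves is easy to miss early on. In their standard textbook forms, with A a starting value, r a growth rate, K a ceiling, and t_0 the midpoint (reading K as the limit imposed by available data is this article's interpretation, not a derived result):

$$
f_{\text{exp}}(t) = A\,e^{rt}, \qquad f_{\text{logistic}}(t) = \frac{K}{1 + e^{-r(t - t_0)}}
$$

For t much smaller than t_0, the term e^{-r(t - t_0)} dominates the denominator, so the logistic curve behaves like K e^{r(t - t_0)}, which is indistinguishable from exponential growth. Only as t approaches t_0 does it bend and level off toward K.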

The path from here to true AI might be longer than the path from the 1970s to here. What I wrote in 2016 still holds true: we are still limited by data, just as we have been all along, and we have not yet found a way to increase the amount of training data available by the orders of magnitude required. We should savor the bounty this progress has given us and build great companies and tools based on LLMs, GANs, and other neural networks, without succumbing to Neo-Yuddism out of fear of something that might not happen in our lifetime.

As you can see, the debate is far from over. On one side are the Symbolic AI followers, who insist that we need a formal understanding of how intelligence works before we're allowed to build better information-querying systems. On the other are the connectionists, who embrace the idea that knowledge acquisition is a dynamic process that takes time and is always limited by the amount of available information.

It's interesting to reflect on how these fundamental philosophical disagreements can reverberate for decades in the discourse and shape the direction of research and funding allocation. It will be intriguing to see whether this debate is ever settled.